9-10 April 2024: See you in Toronto, Canada, at Dx3, the retail, marketing and tech event!

Let data make value

E-commerce scraping

A dropshipping company needed to automatically monitor prices and stock availability for more than 100,000 products across more than 1,500 stores. We built a system of custom scripts with a web interface that checks 60 million pages daily. The system reduced manual work and errors, improved the customer experience, and increased monthly profits by $50-70k.

1000h+

manual work reduced

60 mln

pages processed daily

About the client

The client is a dropshipping company specializing in home furniture that handles more than 130k orders monthly. Its supplier, a retailer, operates more than 1.5k local stores with varying prices for the same products.

Tech stack

Python

Pandas

PostgreSQL

Elasticsearch

GCP

The client's needs

Challenges & solutions

Challenge

Keep infrastructure charges below $1000 per month.

Automate daily monitoring of price accuracy and stock availability for more than 100k products across the entire store network (approximately 18 mln requests per day).

Reduce manual work and operational errors when updating listings and adding new products.

Solution

Developed a complete monitoring process, implemented as custom scripts running on a distributed server architecture.

Challenge

Add cross-checks of the same products listed on Amazon, Walmart, Lowe’s, etc.

Create a system that monitors all available products and flags those with the biggest price variations.

Solution

Created a web interface for controlling the system.
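A relative price spread is one simple way to flag the products with the biggest price variations across stores and marketplaces. The sketch below is a minimal illustration, not the client's actual algorithm: it assumes the scrapers already produce `(product_id, source, price)` tuples, and the function name `top_price_spreads` and the spread metric `(max - min) / min` are our own illustrative choices.

```python
from collections import defaultdict


def top_price_spreads(observations, top_n=3):
    """Rank products by relative price spread across sources.

    observations: iterable of (product_id, source, price) tuples, e.g. prices
    for the same product scraped from different stores or marketplaces.
    Returns (product_id, min_price, max_price, relative_spread) tuples,
    sorted from the largest spread to the smallest.
    """
    prices = defaultdict(list)
    for product_id, _source, price in observations:
        if price > 0:  # skip missing or invalid prices
            prices[product_id].append(price)

    ranked = []
    for product_id, values in prices.items():
        if len(values) < 2:
            continue  # a spread needs at least two offers
        low, high = min(values), max(values)
        ranked.append((product_id, low, high, (high - low) / low))

    ranked.sort(key=lambda row: row[3], reverse=True)
    return ranked[:top_n]


if __name__ == "__main__":
    # Hypothetical sample data: store IDs and marketplace names are made up.
    sample = [
        ("sofa-01", "store-17", 499.0),
        ("sofa-01", "amazon", 549.0),
        ("sofa-01", "walmart", 399.0),
        ("lamp-07", "store-03", 40.0),
        ("lamp-07", "lowes", 42.0),
        ("desk-11", "store-09", 210.0),
    ]
    for row in top_price_spreads(sample):
        print(row)
```

Using a relative rather than absolute spread keeps cheap and expensive items comparable; an absolute threshold could be added if only large dollar gaps matter.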

Challenge

Create a notification system that automatically alerts the admin to order problems, changes, and delays, and tracks order statuses and shipments.

Solution

Created an algorithm to monitor more than 60 mln pages daily.

Freed up six employees, saving 1000 hours of manual work monthly.
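The order alerts described in this challenge can be reduced to a few rules evaluated on each monitoring run. This is a hypothetical sketch: the `Order` fields, status names, and message format are assumptions, and a real system would send these messages through email, chat, or another channel rather than return them as strings.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Order:
    order_id: str
    status: str                            # assumed values: "placed", "shipped", "delivered", "cancelled"
    expected_delivery: date
    previous_status: Optional[str] = None  # status recorded on the previous check


def order_alerts(orders, today):
    """Return admin alert messages for status changes and delayed orders."""
    alerts = []
    for order in orders:
        # Rule 1: the status changed since the last monitoring run.
        if order.previous_status is not None and order.status != order.previous_status:
            alerts.append(
                f"{order.order_id}: status changed {order.previous_status} -> {order.status}"
            )
        # Rule 2: the order is still open after its expected delivery date.
        if order.status not in ("delivered", "cancelled") and today > order.expected_delivery:
            days_late = (today - order.expected_delivery).days
            alerts.append(f"{order.order_id}: delayed by {days_late} day(s)")
    return alerts


if __name__ == "__main__":
    orders = [
        Order("A1", "shipped", date(2024, 3, 1), previous_status="placed"),
        Order("A2", "delivered", date(2024, 3, 1)),
        Order("A3", "placed", date(2024, 3, 10)),
    ]
    for message in order_alerts(orders, today=date(2024, 3, 5)):
        print(message)
```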

Challenge

Automate tracking of cashback websites, with an option to identify orders with missing or incorrect cashback to avoid missed orders.

Solution

Improved customer experience. Reduced the number of canceled orders by 69%.
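Cashback reconciliation boils down to comparing each order with what the cashback site reported. This minimal sketch assumes a single flat cashback rate and dictionaries keyed by order ID; the function name `reconcile_cashback`, the rate, and the tolerance are hypothetical, since real cashback terms vary by site and product category.

```python
def reconcile_cashback(orders, cashback_records, rate, tolerance=0.01):
    """Flag orders with missing or incorrect cashback.

    orders: dict mapping order_id -> order total
    cashback_records: dict mapping order_id -> cashback amount reported by the site
    rate: expected cashback rate (assumed flat for this sketch)
    Returns (missing, incorrect); incorrect holds (order_id, expected, actual).
    """
    missing, incorrect = [], []
    for order_id, total in orders.items():
        expected = round(total * rate, 2)
        actual = cashback_records.get(order_id)
        if actual is None:
            missing.append(order_id)  # the cashback site never registered this order
        elif abs(actual - expected) > tolerance:
            incorrect.append((order_id, expected, actual))
    return missing, incorrect


if __name__ == "__main__":
    orders = {"o1": 100.0, "o2": 200.0, "o3": 50.0}
    reported = {"o1": 3.0, "o2": 5.0}
    missing, incorrect = reconcile_cashback(orders, reported, rate=0.03)
    print("missing:", missing)
    print("incorrect:", incorrect)
```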

Challenge

Create daily reports with detailed statistics.

Automate updates and new listings on the selling platform.

Solution

A tailor-made price arbitrage algorithm added $50-70k in monthly profit.
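A basic price arbitrage check compares the selling-platform price, minus fees, with the cheapest in-stock supplier offer. The sketch below is illustrative only: the flat `fee_rate`, the `min_margin` threshold, and the function name `best_arbitrage` are assumptions and do not describe the client's tailor-made algorithm.

```python
def best_arbitrage(offers, selling_price, fee_rate, min_margin):
    """Pick the cheapest in-stock supplier offer and check whether reselling pays off.

    offers: list of (store_id, price, in_stock) tuples from the monitored stores
    selling_price: listing price on the selling platform
    fee_rate: platform fee as a fraction of selling_price (assumed flat)
    min_margin: minimum acceptable profit per unit
    Returns (store_id, profit) or None if no in-stock offer is profitable enough.
    """
    in_stock = [(store, price) for store, price, available in offers if available]
    if not in_stock:
        return None  # nothing can be sourced right now
    store, cost = min(in_stock, key=lambda offer: offer[1])
    profit = selling_price * (1 - fee_rate) - cost
    if profit < min_margin:
        return None
    return store, round(profit, 2)


if __name__ == "__main__":
    offers = [("s1", 80.0, True), ("s2", 70.0, False), ("s3", 75.0, True)]
    print(best_arbitrage(offers, selling_price=100.0, fee_rate=0.15, min_margin=5.0))
```

Out-of-stock offers are excluded before the minimum is taken, so a cheaper but unavailable store never wins.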

Results

They always find cutting-edge solutions, and they help bring our ideas to life.

Jonathan Lien

CEO

Advanced Clear Path, Inc., E-commerce Company

Steps of providing data scraping services

Step 1 of 5

Free consultation

This is a good time to get to know each other, share values, and discuss your project in detail. We will advise you on a solution and help you determine whether we are the right match for you.

Step 2 of 5

Discovering and feasibility analysis

One of our core values is flexibility, so we can work from either one-page high-level requirements or a full set of technical documents. At this stage, we make sure we understand the full scope of the project. We receive documentation from you or conduct a series of interviews, then prepare the following documents: a list of features with detailed descriptions and acceptance criteria, a list of fields to be scraped, and the solution architecture. Finally, we create a project plan and follow it strictly. We are a results-oriented company, and that is also one of our core values.

Step 3 of 5

Solution development

At this stage, we develop the core logic of the scraping engine and run multiple tests to ensure that the solution works properly. We then map the fields and run the scraping. While scraping, we keep the full raw data so the final data model can easily be extended. Finally, we store the data in any database you need and run quality assurance tests.
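Keeping the raw data next to the mapped fields is what allows the data model to grow later without re-scraping. The sketch below assumes SQLite as a stand-in for "any database", a JSON payload as the raw page, and a hypothetical `pages` table; the `mapper` functions represent the field-mapping step.

```python
import json
import sqlite3


def init_db(conn):
    conn.execute(
        "CREATE TABLE IF NOT EXISTS pages ("
        " url TEXT PRIMARY KEY,"
        " raw TEXT NOT NULL,"    # full raw payload, stored exactly as scraped
        " fields TEXT NOT NULL)" # JSON with the currently mapped fields
    )


def save_page(conn, url, raw, mapper):
    """Store the raw payload together with the fields extracted by `mapper`."""
    conn.execute(
        "INSERT OR REPLACE INTO pages (url, raw, fields) VALUES (?, ?, ?)",
        (url, raw, json.dumps(mapper(raw))),
    )


def remap_all(conn, mapper):
    """Re-extract fields from stored raw data, e.g. after adding a new field."""
    rows = conn.execute("SELECT url, raw FROM pages").fetchall()
    for url, raw in rows:
        conn.execute(
            "UPDATE pages SET fields = ? WHERE url = ?",
            (json.dumps(mapper(raw)), url),
        )


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    init_db(conn)
    raw = '{"name": "Sofa", "price": 499, "stock": 3}'
    # Version 1 of the model only maps the price.
    save_page(conn, "https://example.com/p/1", raw, lambda r: {"price": json.loads(r)["price"]})
    # Version 2 adds stock, computed from the stored raw data without re-scraping.
    remap_all(conn, lambda r: {k: json.loads(r)[k] for k in ("price", "stock")})
    print(conn.execute("SELECT fields FROM pages").fetchone()[0])
```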

Step 4 of 5

Data delivery

After the quality assurance tests are completed, we deliver the data and solutions to the client. Although we have more than 15 years of expertise in data engineering, we expect the client to participate in the project. While developing the solution and crawling data, we provide interim results so you can always see our progress and give us feedback. A high level of communication is also one of our core values.

Step 5 of 5

Support and continuous improvement

We understand how crucial the solutions we build are for our clients. Our goal is to build long-term relationships, so we provide guarantees and support agreements. We are also always happy to assist with further development: 97% of our clients return to us with new projects.

How we provide data integration solutions

Step 1 of 5

Free consultation

This is a good time to get to know each other, share values, and discuss your project in detail. We will advise you on a solution and help you determine whether we are the right match for you.

Step 2 of 5

Discovering and feasibility analysis

One of our core values is flexibility, so we can work from either one-page high-level requirements or a full set of technical documents.

At this stage, we make sure we understand the full scope of the project. We receive documentation from you or conduct a series of interviews, then prepare the following documents: the integration pipeline (which data to collect and where to upload it), the process logic (how the system should work), use cases and acceptance criteria, and the solution architecture. Finally, we create a project plan and follow it strictly.

Step 3 of 5

Solution development

At this stage, we build ETL pipelines and the APIs needed to automate the process. We involve our DevOps team to build the most efficient and scalable solution. We finish with unit tests and quality assurance tests to ensure that the solution works properly. Focus on results is also one of our core values.
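The extract, transform, load sequence can be illustrated with a tiny pipeline. This is a minimal sketch under assumed inputs: a CSV export with hypothetical `sku` and `qty` columns as the source, and an SQLite `stock` table as the target; production pipelines would add scheduling, logging, and error reporting.

```python
import csv
import io
import sqlite3


def extract(csv_text):
    """Extract: read rows from a CSV export (a stand-in for any source system)."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def transform(rows):
    """Transform: normalize SKUs, parse quantities, drop rows that fail validation."""
    clean = []
    for row in rows:
        sku = (row.get("sku") or "").strip().upper()
        try:
            qty = int(row.get("qty") or "")
        except (TypeError, ValueError):
            continue  # skip rows with a missing or non-numeric quantity
        if sku and qty >= 0:
            clean.append((sku, qty))
    return clean


def load(conn, records):
    """Load: upsert records into the target table inside one transaction."""
    with conn:  # commits on success, rolls back on error
        conn.execute("CREATE TABLE IF NOT EXISTS stock (sku TEXT PRIMARY KEY, qty INTEGER)")
        conn.executemany("INSERT OR REPLACE INTO stock (sku, qty) VALUES (?, ?)", records)


if __name__ == "__main__":
    source = "sku,qty\n ab-1 ,5\ncd-2,x\nef-3,-1\n,4\ngh-4,0\n"
    conn = sqlite3.connect(":memory:")
    load(conn, transform(extract(source)))
    print(conn.execute("SELECT sku, qty FROM stock ORDER BY sku").fetchall())
```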

Step 4 of 5

Solution delivery

After the quality assurance tests are completed, we deliver the solution to the client. Although we have more than 15 years of expertise in data engineering, we expect the client to participate in the project. While developing the integration system, we provide interim results so you can always see our progress and give us feedback. A high level of communication is also one of our core values.

Step 5 of 5

Support and continuous improvement

We understand how crucial the solutions we build are for our clients. Our goal is to build long-term relationships, so we provide guarantees and support agreements. We are also always happy to assist with further development: 97% of our clients return to us with new projects.

Steps of providing web application services

Step 1 of 7

Web development discovery

At the start of a web development project, our business analysts document the project requirements in detail and outline the approximate structure of the future web application. DATAFOREST is a custom web application development agency with extensive experience across multiple industries. We give you detailed project documentation and then assemble a team that fits your timeline and budget.

Step 2 of 7

UX and UI design

Based on your wishes, the needs of your target audience, and the best web application design and development practices, our UX and UI experts create an aesthetically pleasing and user-friendly interface for your app to satisfy even the most demanding users.

Step 3 of 7

Web-based application development

At DATAFOREST, we follow the best programming design principles and approaches. As a data engineering company, we build high-load platforms with a high degree of flexibility and a strong focus on results. We meet our deadlines and follow SOC 2 compliance requirements.

Step 4 of 7

Integration

With DATAFOREST, integrating the application into your business won’t stop your processes for a minute. We provide seamless integration with your software infrastructure and ensure smooth operation in no time.

Step 5 of 7

Quality assurance

We use a multi-level quality assurance system to avoid unforeseen issues. Working with DATAFOREST, you can be confident that your web application reaches the market polished and in full compliance with all certification requirements.

Step 6 of 7

24/7 support

Once a product is released to the market, it’s crucial to keep it running smoothly. That’s why our experts provide several models of post-release support to ensure application uptime and stable workflows, increasing user satisfaction.

Step 7 of 7

Web app continuous improvement

Every truly high-quality software product has to constantly evolve to keep up with the times. We understand this, and therefore we provide services for updating and refining our software, as well as introducing new features to meet the growing needs of your business and target audience.

The way we deal with your task and help achieve results

Step 1 of 5

Free consultation

This is a good time to get to know each other, share values, and discuss your project in detail. We will advise you on a solution and help you determine whether we are the right match for you.

Step 2 of 5

Discovering and feasibility analysis

One of our core values is flexibility, so we can work from either one-page high-level requirements or a full set of technical documents.

Data science offers numerous models and approaches, so at this stage we conduct a series of interviews to define the project objectives. We develop and discuss a set of hypotheses and assumptions. We then create the solution architecture, a project plan, and a list of insights or features to deliver.

Step 3 of 5

Solution development

The work starts with data gathering, cleaning, and analysis. We define the target variable, engineer features, and build several models for an initial review. Further modeling involves validating the results and selecting models for further development. Finally, we interpret the results. Data modeling is an iterative process that requires many rounds of back-and-forth. We are results-focused, as that is also one of our core values.
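Validating results and selecting models can be illustrated with a walk-forward comparison of two simple forecasters. This is a toy sketch, assuming a plain list of sales figures and mean absolute error (MAE) as the selection metric; the candidate models and function names are illustrative, not the models used in any client project.

```python
def naive_forecast(history):
    """Predict the next value as the last observed value."""
    return history[-1]


def moving_average_forecast(history, window=3):
    """Predict the next value as the mean of the last `window` values."""
    recent = history[-window:]
    return sum(recent) / len(recent)


def walk_forward_mae(series, forecaster, start):
    """Forecast each point from `start` on using only earlier data; return the MAE."""
    errors = []
    for t in range(start, len(series)):
        prediction = forecaster(series[:t])
        errors.append(abs(series[t] - prediction))
    return sum(errors) / len(errors)


def select_model(series, candidates, start):
    """Score every candidate on the same validation points and pick the lowest MAE."""
    scores = {name: walk_forward_mae(series, fn, start) for name, fn in candidates.items()}
    best = min(scores, key=scores.get)
    return best, scores


if __name__ == "__main__":
    sales = [10, 12, 10, 12, 10, 12, 10, 12]
    candidates = {"naive": naive_forecast, "moving_average": moving_average_forecast}
    print(select_model(sales, candidates, start=3))
```

Walk-forward validation only ever forecasts from past values, which avoids leaking future data into the score, a common pitfall with time series.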

Step 4 of 5

Solution delivery

Data science solutions can take the form of a list of insights or a variety of models that consume data and return results. Although we have more than 15 years of expertise in data engineering, we expect the client to participate in the project. While modeling, we provide interim results so you can always see our progress and give us feedback. A high level of communication is also one of our core values.

Step 5 of 5

Support and continuous improvement

We understand how crucial the solutions we build are for our clients. Our goal is to build long-term relationships, so we provide guarantees and support agreements. We are also always happy to assist with further development: 97% of our clients return to us with new projects.

The way we deal with your issue and achieve results

Step 1 of 5

Free consultation

This is a good time to get to know each other, share values, and discuss your project in detail. We will advise you on a solution and help you determine whether we are the right match for you.

Step 2 of 5

Discovering and feasibility analysis

One of our core values is flexibility, so we can work from either one-page high-level requirements or a full set of technical documents.

Depending on the project objectives, DevOps work involves auditing the current approach, measuring metrics, monitoring, and checking logs. Through a series of interviews, we make sure we understand the full scope of the project. Finally, we create a project plan and follow it strictly. We are a results-oriented DevOps service provider, and that is also one of our core values.

Step 3 of 5

Solution development

At this stage, our certified DevOps engineers refine the product backlog. We deliver results in digital transformation, cost optimization, CI/CD setup, containerization, and, last but not least, monitoring and logging. We are a results-focused company, which is one of our core values.

Step 4 of 5

Solution delivery

After the quality assurance tests are completed, we deliver the solution to the client. Although we have more than 15 years of expertise in data engineering, we expect the client to participate in the project. A high level of communication is also one of our core values.

Step 5 of 5

Support and continuous improvement

We understand how crucial the solutions we build are for our clients. Our goal is to build long-term relationships, so we provide guarantees and support agreements. We are also always happy to assist with further development: 97% of our clients return to us with new projects.

Success stories

Check out a few case studies that show why DATAFOREST will meet your business needs.

All Success Stories

Data parsing

We helped a law consulting company build a unique tool to collect and store data from millions of pages across five court websites. The scraped information included PDF, Word, JPG, and other files. The scripts run automatically, so the collected files are updated whenever the source information changes.

14.8 mln

pages processed daily

43 sec

updates checking

Sebastian Torrealba

CEO, Co-Founder

DeepIA, Software for the Digital Transformation


These guys are fully dedicated to their client's success and go the extra mile to ensure things are done right.
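Keeping collected files up to date when the source information changes usually relies on some form of change detection. One common approach, assumed here rather than taken from the case study, is to store a SHA-256 hash of each downloaded file and re-process only the URLs whose hash differs; the function names are hypothetical.

```python
import hashlib


def content_hash(data: bytes) -> str:
    """Return a SHA-256 fingerprint of a downloaded file or page."""
    return hashlib.sha256(data).hexdigest()


def detect_changes(known_hashes, fetched):
    """Return URLs whose content is new or changed, updating known_hashes in place.

    known_hashes: dict mapping url -> hash seen on the previous run
    fetched: dict mapping url -> raw bytes downloaded on this run
    """
    changed = []
    for url, data in fetched.items():
        digest = content_hash(data)
        if known_hashes.get(url) != digest:
            changed.append(url)
            known_hashes[url] = digest
    return changed


if __name__ == "__main__":
    known = {}
    print(detect_changes(known, {"doc-1.pdf": b"v1", "doc-2.pdf": b"v1"}))
    print(detect_changes(known, {"doc-1.pdf": b"v1", "doc-2.pdf": b"v2"}))
```

Comparing hashes means only a short fingerprint per file needs to be stored between runs, not the previous file contents.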

Demand forecasting

We built a sales forecasting system that optimizes warehouse stock levels and the product range at each location, taking each outlet's specifics into account. The system has processed more than 8 TB of sales data. These improvements have helped the retail business increase revenue, improve logistics planning, and achieve other business goals.

88%

forecasting accuracy

0.9%

out-of-stock reduced

Andrew M.

CEO

Luxury Goods Retail


I think what is really special about the DATAFOREST service is its flexibility, openness, and level of quality and expertise.

Supply chain dashboard

The client needed to make employees' work more efficient by building a data source integration and reporting system for use at different management levels. We developed a system that unifies relevant data from all sources and stores it in a structured form, saving more than 900 hours of manual work monthly.

900h+

manual work reduced

100+

system integrations

Michelle Nguyen

Senior Supply Chain Transformation Manager

Unilever, World’s Largest Consumer Goods Company


Their technical knowledge and skills offer great advantages. The entire team has been extremely professional.

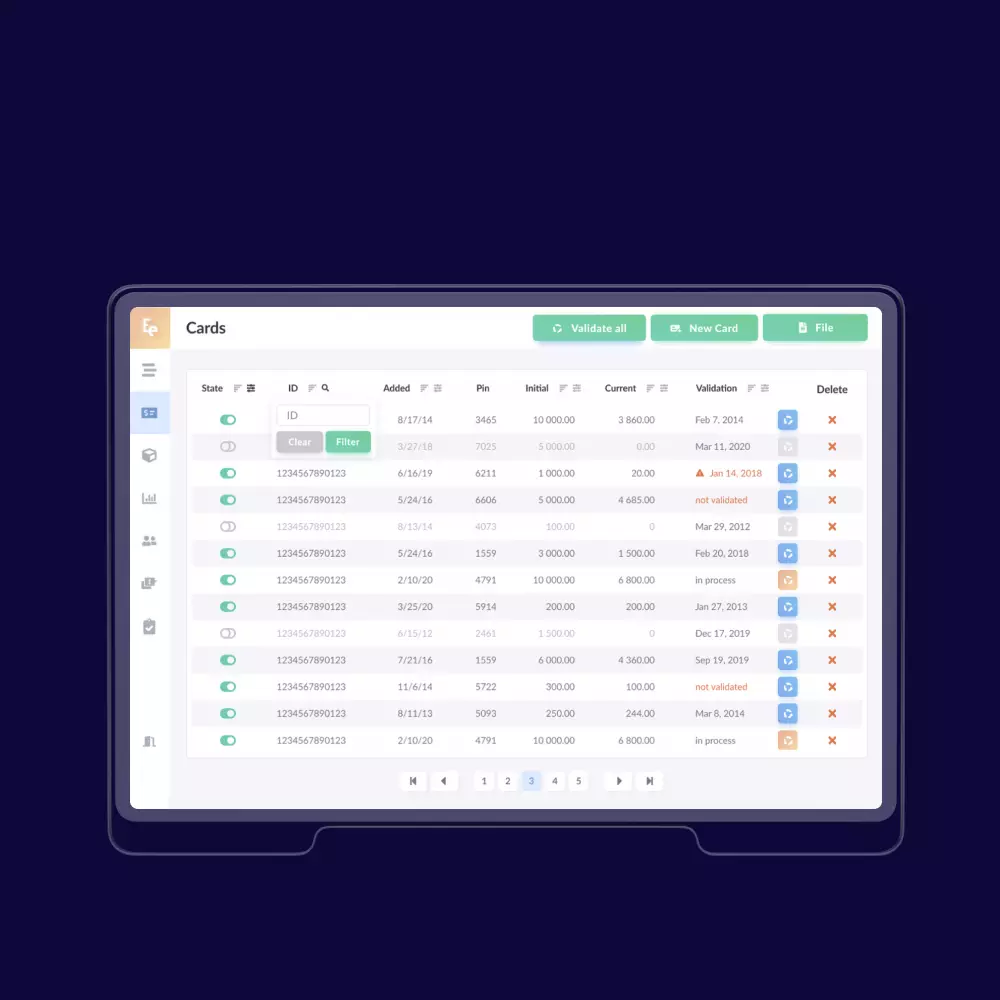

Web app for dropshippers

The client wanted to create a web app that shows people who sell products online (dropshippers) the most popular products and calculates their potential profits. DATAFOREST designed and built the web app from scratch, creating high-load scraping algorithms to extract data from various e-commerce marketplaces, developing AI algorithms to calculate profits, and integrating a payment system with various features.

100k+

hourly users

1.5 mln+

Shopify stores

Josef G.

CEO, Founder

Software Development Agency


If we experience any problems, they come back to us with good recommendations on how the project can be improved.


We’d love to hear from you

Share the project details – like scope, mockups, or business challenges. We will carefully check and get back to you with the next steps.
